Our production environment consists of SQL Server 2008 R2, with several databases spread across multiple SQL Server instances. We follow a somewhat old-school approach to deployment: once a project is past QA and in the Stage/UAT environment, we no longer create and deploy builds in a cumulative fashion. When bugs are found in Stage/UAT, the builds created to fix them (an iterative cycle) are preserved and deployed sequentially, as-is, in Production as well. If we needed 10 iterations (hence 10 builds) to fix a bug in Stage/UAT, we will deploy the same 10 builds to Production, in the same order!

The Problem:

This tediously meticulous approach guarantees (in theory) that Production follows the same successful deployment path that was taken in Stage/UAT. The same code is deployed to Production as was deployed to Stage/UAT, and hence it is expected to produce the same results (again, in theory). However, the number of iterations needed to fix all bugs in Stage/UAT is often large enough that we routinely end up with build counts running into double digits. Efficiently and accurately deploying 10-plus builds to Production within a relatively short deployment window was starting to become a challenge for us (our DBA is expected not only to log deployment results, but also to proceed with the next script ONLY upon success of the previous one). While we were not ready to fully automate the execution of our deployment scripts via a batch run, we needed a relatively fast command-line method for deploying our SQL scripts, where the execution messages are not only captured in a log file but also displayed on the screen. This would let our DBA see whether a script’s execution encountered any errors without having to open the log file, and would also make the deployment faster than a fully manual, SSMS-based approach.

The Solution:

Our first attempt used SQLCMD to achieve a fair degree of automation and cut deployment time by reducing manual work. I have a simple test script here with a few PRINT statements, one simple SELECT statement that executes successfully, and another simple SELECT statement that fails due to a non-existent table (to simulate a script failure). Do take note that my script uses the SQLCMD command “:on error exit”, which stops execution of the script upon encountering an error. I have named the script, quite creatively, “test.sql”.

USE Demo;

GO

:on error exit

PRINT N'Deploying Demo Script...';

GO

SELECT COUNT(*) FROM [dbo].[demo_order];

GO

PRINT N'Running query against non-existing table...';

GO

SELECT COUNT(*) FROM [dbo].[does_not_exist];

GO

PRINT N'This PRINT should not run as previous query errors and batch should exit...';

GO

When run in SSMS (with SQLCMD Mode enabled, which the “:on error exit” line requires), this script produces the following output and exits upon encountering the first error, as expected:

Deploying Demo Script...

(1 row(s) affected)

Running query against non-existing table...

Msg 208, Level 16, State 1, Line 2

Invalid object name 'dbo.does_not_exist'.

** An error was encountered during execution of batch. Exiting.

The quickest way to automate the execution of my test script is to run SQLCMD from the command line. Note the “-b” option in my SQLCMD command string, which makes sqlcmd stop and return a non-zero exit code when an error occurs. This is functionally similar to using the “:on error exit” command within the script itself. Here is the simple command line string:

sqlcmd -S WKS18176\SANIL_2012 -d Demo -b -i test.sql -o test.sql.log.txt

When this SQLCMD command string is executed at the command prompt, it creates the log file, which documents the error message and the fact that the batch was terminated. However, note that the command prompt screen shows no indication of the script’s success or failure.

Unless our DBA opens the log file “test.sql.log.txt” for review, he cannot see the execution and error messages shown below. (I could use the “type” command on the next line here, but we prefer to have a single-line command.)

Changed database context to 'Demo'.

Deploying Demo Script...

-----------

12

(1 rows affected)

Running query against non-existing table...

Msg 208, Level 16, State 1, Server WKS18176\SANIL_2012, Line 2

Invalid object name 'dbo.does_not_exist'.

This is where PowerShell came to our rescue. With a minor modification to my SQLCMD command (dropping the “-o” option so that the output is written to the console stream instead of a file) and the addition of a PowerShell cmdlet, Tee-Object, we were able to not only log the execution messages to a file, but also display them on the PowerShell screen, without losing any functionality related to exiting the batch upon error.

sqlcmd -S WKS18176\SANIL_2012 -d Demo -b -i test.sql | Tee-Object -file test.sql.log.txt

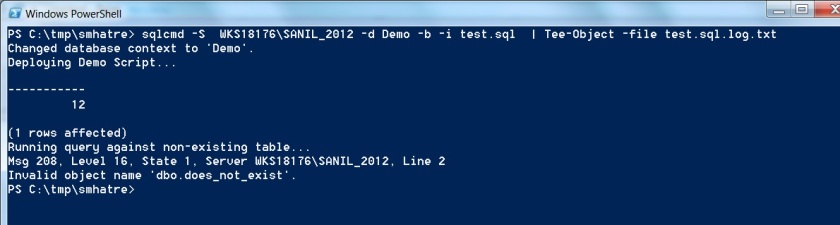

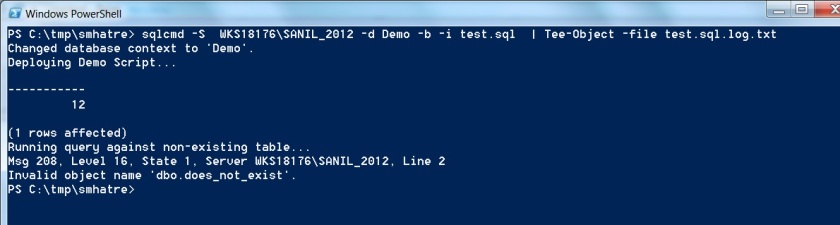

Here is a screenshot of executing my test script via PowerShell.
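The same one-liner could be extended to the multi-build case described earlier, where each script must succeed before the next one runs. What follows is only a minimal sketch, not the process we actually use: the script names (build_01.sql and so on) and the per-script log naming are hypothetical, and it assumes sqlcmd is on the PATH and that each script should stop the run on failure. It relies on the “-b” option making sqlcmd return a non-zero exit code on error, which PowerShell exposes through the automatic variable $LASTEXITCODE.

```powershell
# Hypothetical build scripts, listed in the order they were applied in Stage/UAT.
$buildScripts = @('build_01.sql', 'build_02.sql', 'build_03.sql')

foreach ($file in $buildScripts) {
    Write-Host "Deploying $file ..."

    # Same command as the single-script example: -b makes sqlcmd return a
    # non-zero exit code on error, and Tee-Object shows the output on screen
    # while also writing it to a per-script log file (hypothetical naming).
    sqlcmd -S WKS18176\SANIL_2012 -d Demo -b -i $file | Tee-Object -FilePath "${file}.log.txt"

    # $LASTEXITCODE holds the exit code of the last native program run (sqlcmd).
    if ($LASTEXITCODE -ne 0) {
        Write-Host "$file failed (exit code $LASTEXITCODE). Stopping here." -ForegroundColor Red
        break
    }
}
```

Writing one log file per script keeps the results of each build separate, which matches the requirement that the DBA log the outcome of every script before moving on to the next.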

This was my first time using PowerShell, and I am impressed by how quickly we were able to learn and use it. Over the next few weeks, I am going to explore PowerShell further and learn how I can apply it to ease some more of our automation pain points!
